Model Tag: llava-phi3

A compact LLaVA model fine-tuned from Phi 3 Mini.

Last Updated: 2024-05-07

Exploring LLaVA Phi-3: A Vision Model Based on Phi-3

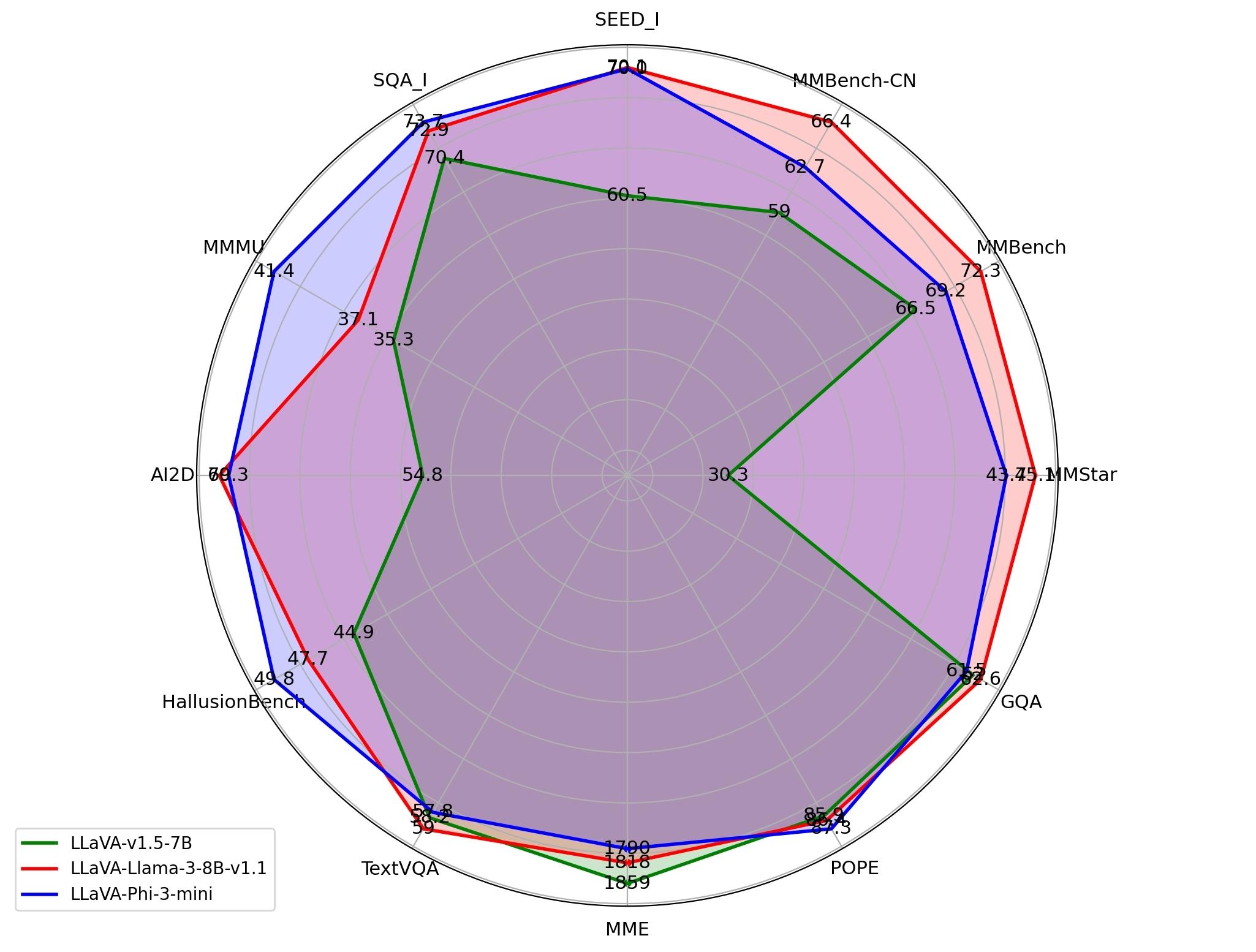

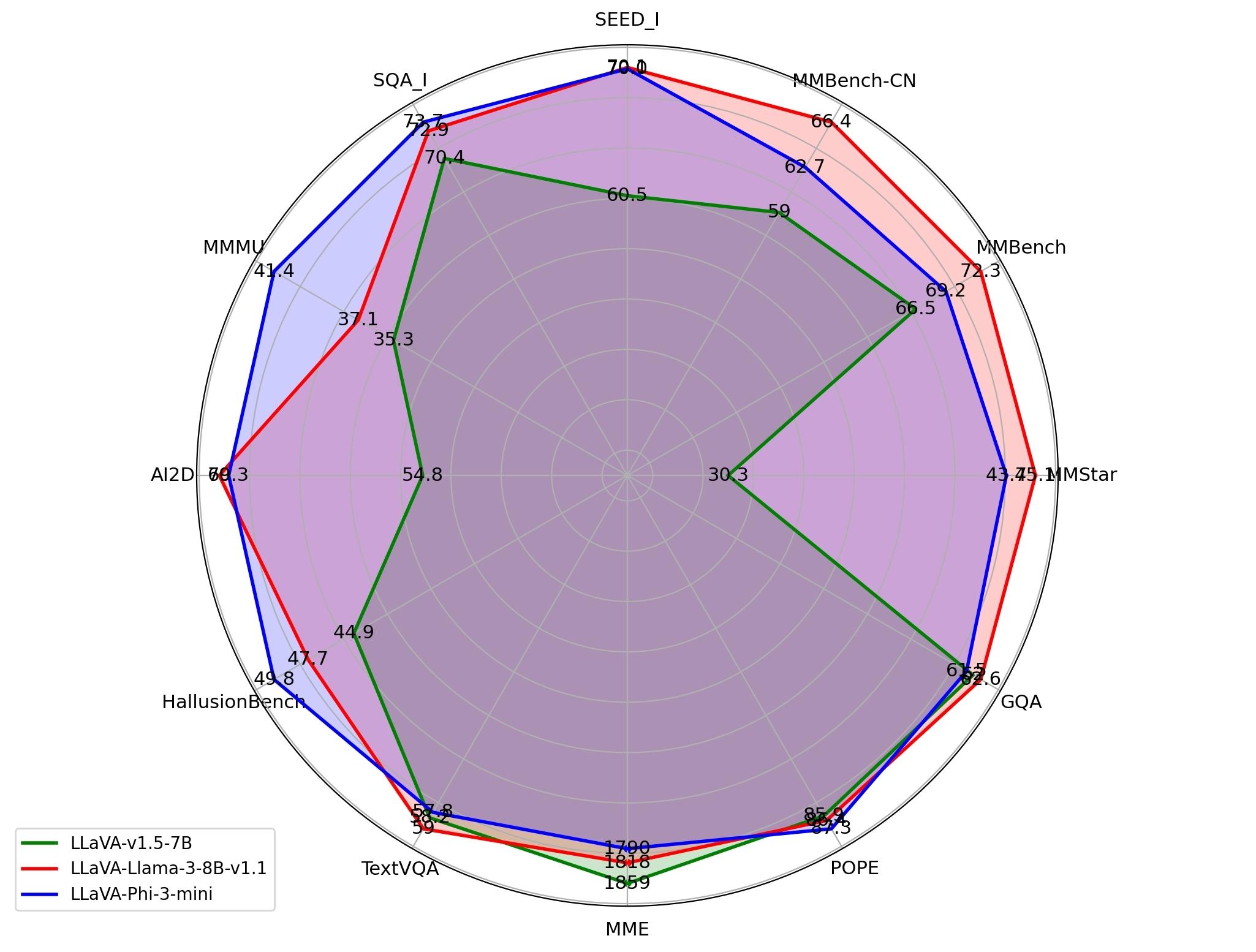

LLaVA Phi-3 is a compact multimodal model whose benchmark results are comparable to those of earlier LLaVA models. This article covers what LLaVA Phi-3 is, what it can do, and how to run it on a local machine.

What is LLaVA Phi-3?

LLaVA Phi-3 is a multimodal (vision-language) model fine-tuned from Phi 3 Mini 4k. Following the LLaVA approach, it connects an image encoder to the Phi 3 Mini language model, so it can take an image together with a text prompt and respond in text, for example by describing a picture or answering questions about it. It is designed to stay small and efficient while still giving accurate, context-aware responses.

Key Features of LLaVA Phi-3

- Compact Design: LLaVA Phi-3 is small enough to run locally, yet it still delivers solid performance.

- High Performance: Its benchmark results are competitive with those of larger models, which makes it a reliable choice for developers and researchers.

- Versatility: It handles a range of tasks, from conversational applications to describing images and answering questions about them.

- Integration with Braina AI: Braina AI can run the model for local inference on either CPU or GPU.

Application Areas

LLaVA Phi-3 can be used in a range of domains, including:

- Customer Support: Automating responses to frequently asked questions and providing assistance in real time.

- Content Creation: Assisting writers and marketers in generating creative content ideas and drafts.

- Education: Offering personalized tutoring experiences by generating informative responses tailored to individual learning needs.

- Research: Helping researchers by summarizing large volumes of text and extracting key information.

LLaVA Phi-3 shows that a local multimodal model can be both efficient and capable. For developers, researchers, and businesses that want to add image and language understanding to their applications, it is a practical option. With Braina AI, you can run the model on your own hardware and evaluate how well it fits your use case.